ICCV 2019 Tutorial on Interpretable Machine Learning for Computer Vision


Auditorium, COEX Convention Center, Seoul, Korea


Complex machine learning models such as deep convolutional neural networks and recursive neural networks have recently made great progress in a wide range of computer vision applications, such as object/scene recognition, image captioning, and visual question answering. But they are often perceived as black boxes. As models grow deeper in search of better recognition accuracy, it becomes even harder to understand what they predict and why.

Continuing from the 1st Tutorial on Interpretable Machine Learning for Computer Vision at CVPR’18, which drew an audience of more than 1,000, this tutorial aims to engage the computer vision community broadly with the topic of interpretability and explainability in computer vision models. We will review recent progress on visualization, interpretation, and explanation methodologies for analyzing both the data and the models in computer vision. The main theme of the tutorial is to build consensus on the emerging topic of machine learning interpretability by clarifying its motivation, typical methodologies, prospective trends, and potential industrial applications.

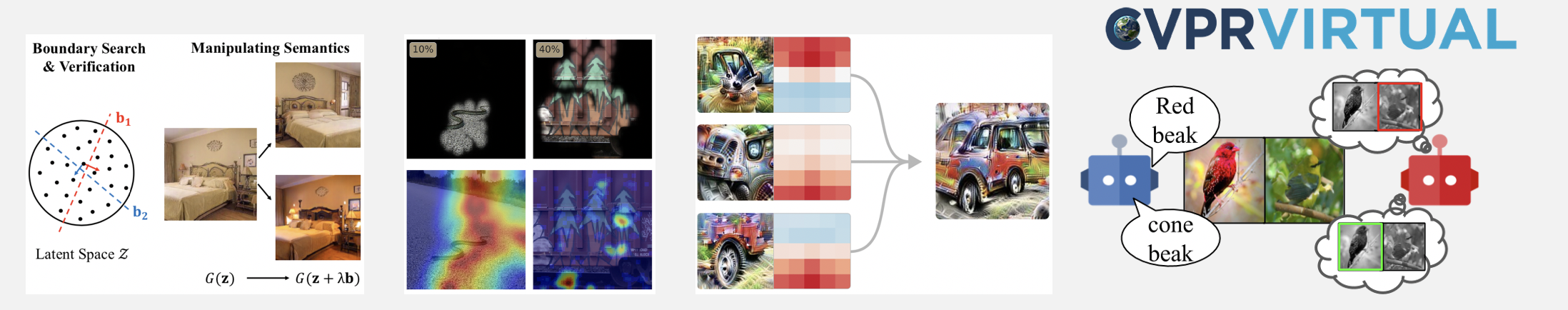

14:00 - 14:45. Talk 1 by Andrea Vedaldi: Understanding Models via Visualization and Attribution (slide)

14:45 - 15:30. Talk 2 by Bolei Zhou: Understanding Latent Semantics in GANs (slide)

15:30 - 16:00. Coffee Hour

16:00 - 16:45. Talk 3 by Alan L. Yuille: Deep Compositional Networks (slide)

16:45 - 17:30. Talk 4 by Alexander Binder: Explaining Deep Learning for Identifying Structures and Biases in Computer Vision (slide)

A great success! Prof. Alan Yuille is giving the tutorial in the photo. Thanks to everyone for participating.

Please contact Bolei Zhou if you have any questions.